How adopting GitOps made our lives easier

GitOps is a buzzword, but it's also a concept that simply works. In this article, I'll share the story of how and why it worked for my Kubernetes Infrastructure team.

What was it like before GitOps?

I think back to the pre-GitOps dark ages of my team's history.

The year was 2019.

Since we were running the typical Fury stack, consisting of Terraform, Ansible, and Kustomize, we already had Infrastructure as Code (IaC) nailed down.

We maintained the desired state of the infrastructure we managed in our infrastructural git repository, which we called the infra repo.

And yet, something was missing, and things just didn't feel right.

The infra repo supposedly defined the desired state via the IaC principle.

But wait, what if the clusters were running something that wasn't persisted in the infra repo? And worse yet, what if I actually broke something by applying the "desired state" of the infra repo? This was a very legitimate concern.

By applying the Kubernetes manifests from the infra repo, I could be undoing someone's work, or even straight up breaking some important deployment.

In fact, this quite often happened, leading to a bunch of minor incidents and conflicts.

So, we started fighting against such cases the only way that made sense at the time.

Before applying manifests from the infra repo, we would run kubectl diff to carefully examine the differences between the manifests in the cluster and those in the infra repo.

This actually sort of worked – kubectl diff allowed us to ensure we weren't breaking anything, by adding an additional validation step.
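
To make the idea of drift concrete, here is a minimal Python sketch that compares a desired state with a live state and reports where they diverge. It is a conceptual illustration only, not how kubectl diff is implemented; the function name, resource names, and dictionary layout are all made up for this example. Note that `kubectl diff -f` only looks at the objects in the files it is given, so the "only in cluster" case below is something the sketch adds for illustration.

```python
def diff_manifests(desired: dict, live: dict) -> dict:
    """Return a mapping of resource name -> kind of drift (hypothetical helper)."""
    drift = {}
    for name in sorted(desired.keys() | live.keys()):
        if name not in live:
            drift[name] = "missing from cluster"
        elif name not in desired:
            drift[name] = "only in cluster"
        elif desired[name] != live[name]:
            drift[name] = "differs"
    return drift


# Illustrative data: what the infra repo says vs. what is actually running.
desired = {
    "deployment/api": {"replicas": 3},
    "configmap/app": {"LOG_LEVEL": "info"},
}
live = {
    "deployment/api": {"replicas": 5},              # someone scaled it by hand
    "cronjob/tmp-debug": {"schedule": "* * * * *"},  # never committed
}

report = diff_manifests(desired, live)
for name, status in report.items():
    print(f"{name}: {status}")
```

Every entry in such a report is a decision someone has to make by hand before applying, which is exactly the validation burden described above.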

Besides that, we did our best to encourage all engineers to persist their changes as code, with moderate success.

In this way, we were able to function, but as you can imagine, it wasn't really optimal.

On the Ansible side, we suffered from the same problem, but objectively much worse.

It's reasonably possible to properly understand the differences between the Kubernetes manifests in the cluster and in the infra repo.

But it could be near impossible to comprehend the divergences between a virtual machine and the Ansible playbook that's supposed to define it.

You can't ever be certain that running an Ansible playbook is harmless.

In fact, it's reasonably possible that running an Ansible playbook would undo some manual fix, leading to a huge incident.

Or perhaps the playbook could simply not be idempotent, thus doing harm if launched repeatedly.
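
The difference between an idempotent and a non-idempotent step can be sketched in a few lines of Python. This is an analogy, not Ansible code: the two functions and the sample config lines are invented for illustration. In Ansible terms, the first roughly resembles appending to a file from a shell task, while the second resembles what a module like `lineinfile` is meant to do.

```python
def append_line(config: list[str], line: str) -> list[str]:
    """Non-idempotent: every run adds another copy of the line."""
    return config + [line]


def ensure_line(config: list[str], line: str) -> list[str]:
    """Idempotent: running once or many times gives the same result."""
    return config if line in config else config + [line]


base = ["PermitRootLogin no"]
line = "MaxSessions 10"

# Simulate running the same "playbook step" twice.
twice_appended = append_line(append_line(base, line), line)
twice_ensured = ensure_line(ensure_line(base, line), line)

print(twice_appended)
print(twice_ensured)
```

A playbook built from steps of the second kind can be rerun safely; one containing steps of the first kind drifts a little further every time it runs.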

This leads you into a terrifying downward spiral.

- You'd rather perform a configuration change manually than utilize the Ansible playbook, because you don't trust the playbook.

- In order to "maintain" the playbook, you then blindly add your manually performed step to the playbook.

- As a result of this action, you've just made the playbook even less trustworthy.

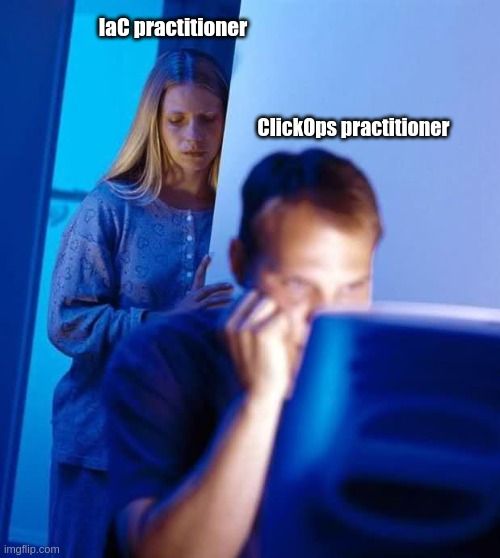

Clearly, the root problem is a lack of trust, whether among team members or in the persisted IaC.

At best, such a lack of trust introduces a heavy mental burden to every single activity your team will ever do with your infrastructure.

At worst, if left unchecked, it could cause very serious incidents, and leave you completely paralyzed, afraid of "touching things that are better left untouched".

I believe the simplest, most natural solution to this lack of trust is GitOps.

How things got better with GitOps

Introducing Flux

Near the end of 2019, I was introduced to the world of GitOps when we installed Flux.

Flux is actually a really simple tool when it comes down to it – at least the way we decided to use it.

Inside each Kubernetes cluster is a Flux pod, which periodically clones the latest infra repo state, and applies relevant manifests to the cluster.
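
The synchronization loop can be modeled as a tiny Python sketch. This is a simplified model under my own assumptions, not Flux's implementation: the cluster and the repo are plain dictionaries, `sync_once` is a hypothetical name, and the assignment stands in for applying a manifest.

```python
def sync_once(desired: dict, cluster: dict) -> list[str]:
    """Apply every manifest from the repo; return the names that changed."""
    changed = []
    for name, spec in desired.items():
        if cluster.get(name) != spec:
            cluster[name] = dict(spec)  # stand-in for applying the manifest
            changed.append(name)
    return changed


# Illustrative state: the repo wants 3 replicas, someone edited the cluster by hand.
repo = {"deployment/api": {"replicas": 3}}
cluster = {"deployment/api": {"replicas": 5}}

first_pass = sync_once(repo, cluster)   # the manual edit is reverted
second_pass = sync_once(repo, cluster)  # nothing left to do

print(first_pass, second_pass, cluster)
```

In the real setup this pass repeats on a timer, so any manual change to a tracked resource only survives until the next sync.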

Perhaps surprisingly, this was precisely what we needed, and the benefits were immediately tangible.

We now had confidence that whatever was present in the infra repo was also present inside the cluster.

- No more kubectl diff, since there are no unexpected diffs anymore.

- No fear of undoing anyone's work anymore, because if Flux is running, it would have already undone their work anyway.

- No need to convince anyone to utilize IaC, because with Flux in play, they have no choice anymore.

Flux single-handedly fixed all these problems at almost no cost!

Furthermore, there are some extra benefits.

- Imagine that someone deletes something important from the cluster, perhaps by accident. Thanks to Flux, the undesired change should be automatically corrected within minutes. This provides us with an enhanced sense of safety.

- Since we have many clusters hooked up to the infra repo, GitOps saves us a lot of work by deploying the manifests to all clusters. Instead of applying something to all of my clusters manually, I can test it in one of them, and then utilize Flux to deploy it to all the other clusters.

Flux: disabling & enabling

However, sometimes, we actually want to temporarily disable Flux.

For example, we want to test a feature branch that isn't yet worth pushing to master – that makes sense.

No problem - Flux is easily disabled. Uh-oh.

In the first few months after adopting Flux, we saw situations where team members were "testing a feature branch". Quite often, Flux would be disabled.

In the worst cases I've seen, it was disabled for weeks at a time.

This presented itself as an interesting problem.

- In order to merge a feature into the master branch, the feature must be properly tested.

- In order to properly test a feature, it must be deployed to a cluster.

- In order to deploy the feature to a cluster, Flux must be disabled (in some cases).

- Therefore, in order to test feature branches, Flux must be disabled.

As you can imagine, the benefits offered by Flux were hindered by Flux often being disabled for testing purposes.

As before, you could argue that this is a problem of culture and workflows, and I'd agree.

But once again, we discovered a mechanical solution, more or less by accident.

We created a cronjob that enables Flux every morning.

At first, we were even doubtful of this being correct – what if someone purposefully scales down Flux for testing purposes, and the cronjob interferes?

But we went with it, and soon found out that we'd struck gold.

Since that cronjob was introduced, we've never again had an issue with Flux being disabled for extended periods of time.

We've found it to be a great incentive for proper workflow.

The psychology behind it is quite simple.

- If your WIP is important, you'll either disable Flux again tomorrow in order to reapply it, or merge it to master that same day.

- If your WIP is not important, you don't care if it's wiped from the cluster by Flux in the morning.
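
For illustration, the morning job boils down to one scheduled command that scales Flux back up. The Python sketch below only builds that command and pairs it with a schedule; the deployment name `flux`, the namespace `flux`, and the 07:00 schedule are assumptions made for this example, not our actual configuration.

```python
import shlex

# Assumed schedule in standard cron syntax: every day at 07:00.
CRON_SCHEDULE = "0 7 * * *"


def enable_flux_command(namespace: str = "flux",
                        deployment: str = "flux",
                        replicas: int = 1) -> list[str]:
    """Build the kubectl command that scales the Flux deployment back up."""
    return [
        "kubectl", "scale", f"deployment/{deployment}",
        f"--replicas={replicas}",
        "--namespace", namespace,
    ]


command = enable_flux_command()
print(CRON_SCHEDULE, shlex.join(command))
```

The point is how little machinery is involved: the job does not check whether anyone is in the middle of testing, it simply restores the default every morning.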

Thanks to the Flux-enabling cronjob, our workflow goes something like this:

- A feature branch with a change is created.

- The feature branch is properly tested, sometimes by manually applying it to the cluster, while disabling Flux.

- We properly review the feature branch and observe the behavior inside the cluster.

- We merge the feature branch into master.

- Thanks to the Flux-enabling cronjob, we don't need to worry about ensuring Flux runs often enough anymore.

Flux: garbage collection

Soon after, we started utilizing the Flux garbage collection feature.

We've found it to constitute an amazing productivity boost that everyone loves.

Because of that, I think it deserves a little spot inside this article.

Flux garbage collection works as follows:

- When deploying a resource, Flux deploys it with an extra annotation for tracking purposes.

- If Flux finds a resource that has this extra annotation, but isn't present in the infra repo anymore, Flux deletes this resource from the cluster.
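
The two rules above can be sketched in Python as follows. This is a model of the idea, not Flux's code: the annotation key `example.com/managed-by-sync` is a made-up placeholder, and the cluster is again just a dictionary.

```python
GC_MARK = "example.com/managed-by-sync"  # hypothetical tracking annotation key


def garbage_collect(repo_names: set[str], cluster: dict) -> list[str]:
    """Delete marked resources that are no longer in the repo; return their names."""
    deleted = []
    for name in sorted(cluster):  # sorted() copies the keys, so deleting is safe
        marked = GC_MARK in cluster[name].get("annotations", {})
        if marked and name not in repo_names:
            del cluster[name]
            deleted.append(name)
    return deleted


# Illustrative cluster: two resources deployed by the sync tool, one created by hand.
cluster_state = {
    "deployment/api": {"annotations": {GC_MARK: "true"}},
    "deployment/old": {"annotations": {GC_MARK: "true"}},
    "job/manual": {"annotations": {}},
}
repo_names = {"deployment/api"}  # deployment/old was removed from the repo

deleted = garbage_collect(repo_names, cluster_state)
print(deleted, sorted(cluster_state))
```

The unmarked `job/manual` survives even though it is not in the repo, because the tool never deployed it in the first place.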

Commonly, you'd utilize it by deleting a manifest from the infra repo, and Flux would then delete the corresponding resource from the clusters.

It therefore works quite conservatively: it only ever removes resources that Flux itself deployed, so there is little risk of it doing anything undesirable.

And yet, it's extremely useful.

We've found it to be useful for this specific set of use cases:

- Classic "garbage collection" of resources that aren't needed anymore.

- Complex upgrades of tools, such as cert-manager.

Allow me to elaborate on the second point.

Many cloud-native tools utilize Custom Resource Definitions (CRDs) and Operators.

Sometimes, their version upgrade involves removing the old CRDs, and replacing them with new versions of said CRDs.

This is generally quite a tedious job.

With Flux garbage collection, this is all handled for you automatically.

For example, we've done the "complicated" upgrade from cert-manager 0.9 to 0.14 purely via Flux garbage collection.

Utilizing Flux garbage collection has undoubtedly saved us countless hours of work.

Ansible GitOps

Flux enabled us to work in a GitOps fashion with Kubernetes manifests, but we still needed a solution for Ansible. Therefore, we embarked on a mission to find a tool to enable us to work with Ansible in a similar way to Flux.

We considered Ansible Tower and the AWX operator, but they weren't really the right thing for us. In the end, we opted to recreate the synchronization loop, similar to Flux, via a periodic Jenkins job. The job is scheduled to run all Ansible tasks for each Kubernetes cluster in the infra repo every 2 hours. Implementing this was quite simple.
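
As a rough sketch of what such a periodic job does, the Python below walks the cluster directories of a checked-out repo and builds one `ansible-playbook` invocation per cluster. The directory layout (`clusters/<name>/`), the file names `inventory.ini` and `site.yml`, and the function name are assumptions for illustration; our real repo layout and Jenkins job are not shown here. The commands are only planned, not executed.

```python
from pathlib import Path
import tempfile


def plan_ansible_runs(repo_root: Path) -> list[list[str]]:
    """Build one ansible-playbook command per cluster directory in the repo."""
    runs = []
    for cluster_dir in sorted((repo_root / "clusters").iterdir()):
        if not cluster_dir.is_dir():
            continue
        runs.append([
            "ansible-playbook",
            "-i", str(cluster_dir / "inventory.ini"),  # assumed inventory name
            str(cluster_dir / "site.yml"),             # assumed playbook name
        ])
    return runs


# Demo against a throwaway directory that stands in for a cloned infra repo.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ("prod", "staging"):
        (root / "clusters" / name).mkdir(parents=True)
    runs = plan_ansible_runs(root)
    for run in runs:
        print(" ".join(run))
```

A scheduler then only has to run this plan at a fixed interval, every 2 hours in our case, which is why the loop itself was simple to implement.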

The only reasonably complicated task was to ensure that all playbooks were truly idempotent.

At the time of writing this article, we've successfully concluded the PoC stage of Ansible GitOps, and we are about to launch it for all our clusters.

We're cautiously optimistic that it will bring the desired benefits.

Future work

We might consider refactoring our current Ansible GitOps implementation into a Kubernetes pod, very similar to Flux in implementation, principle, and purpose.

A simple Kubernetes CronJob could clone the infra repo, and just run Ansible in the directory belonging to that particular cluster.

As a benefit, we would obtain a concise, portable solution, thus eliminating the current reliance on Jenkins.

This could be packaged together with Flux into some sort of a standard Fury-GitOps module.

In relation to that, in the long-term future, we'll also be considering some sort of a Terraform GitOps system.

At KubeCon Europe 2021, we noticed that multiple companies already utilize something similar, e.g., via a combination of Flux and ClusterAPI.

However, we don't really feel motivated enough for this, as our current non-GitOps Terraform workflow is reasonably sufficient for our needs.

Summary

Desynchronization between the real state and the desired state inside the infrastructural repository can be problematic.

We're successfully eliminating such desynchronization by introducing GitOps.

Adopting Flux brought many benefits at very little cost.

We're now experimenting with a similar GitOps system for Ansible.